25. Voting

Ensemble voting is a widely used ensemble learning technique in which multiple models (or "base learners") make predictions independently and a final decision is made by combining those predictions. By drawing on the strengths of each base learner, this approach can improve accuracy and robustness, and the pattern of agreement among the models gives some insight into how the final decision was reached.

Types of Ensemble Voting Methods

There are several types of ensemble voting techniques commonly used in machine learning:

-

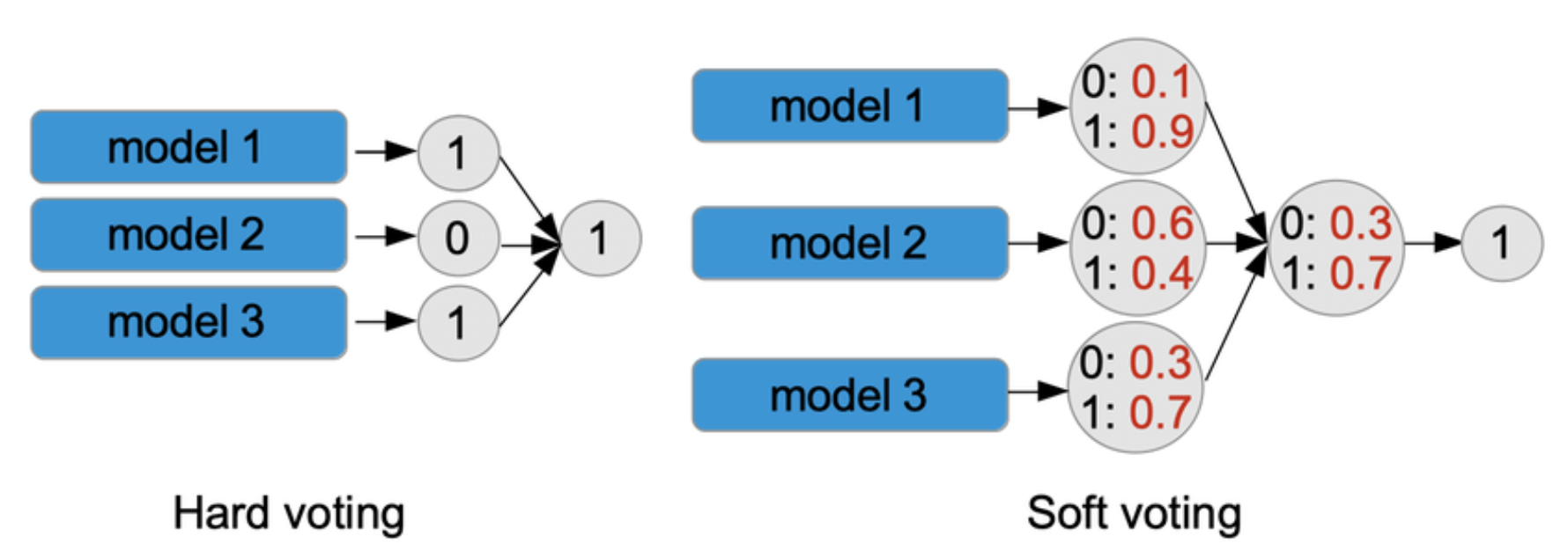

Majority (Hard) Voting: Each model in the ensemble makes a categorical prediction (class label), and the class label receiving the majority of votes is chosen as the final prediction. Majority voting is particularly effective when individual models are accurate and diverse. In cases where votes are tied, tie-breaking rules (e.g., selecting the class with the highest confidence) can be applied.

- Weighted Voting: In this method, each model is assigned a weight based on its performance or reliability. Each model's vote (a class label in hard voting, or a probability vector in soft voting) is scaled by its weight, and the final decision is taken from the weighted tally or weighted average. Weights can be assigned manually or tuned with techniques such as cross-validation.

- Soft Voting: Soft voting is used when models provide probability estimates or confidence scores for each class label instead of discrete predictions. The predicted probabilities from each model are averaged, and the class with the highest average probability is selected as the final prediction. This approach allows models to incorporate their confidence levels in the final decision.

- Voting Regressor: Ensemble voting can also be applied to regression tasks, where each base model outputs a numerical prediction. In this case, the final output is typically the average of all predictions, which helps reduce variance and improves stability.
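
The four combination rules above can be sketched in a few lines of plain Python. This is a minimal illustration for a single sample, not a reference implementation: the function names (`hard_vote`, `soft_vote`, `average_regression`) and the tie rule (ties go to the smallest label or lowest class index) are assumptions made for the example.

```python
from collections import defaultdict


def hard_vote(votes, weights=None):
    """Return the winning class label for one sample.

    votes: one class label per model. weights: optional per-model weights;
    with no weights this is plain majority (plurality) voting.
    """
    weights = weights or [1.0] * len(votes)
    tally = defaultdict(float)
    for label, w in zip(votes, weights):
        tally[label] += w
    # Assumed tie rule: highest tally wins; ties go to the smallest label.
    return min(tally, key=lambda c: (-tally[c], c))


def soft_vote(probas, weights=None):
    """Return (winning class index, averaged probabilities) for one sample.

    probas: one probability vector per model, all of the same length.
    """
    weights = weights or [1.0] * len(probas)
    total = sum(weights)
    n_classes = len(probas[0])
    avg = [
        sum(w * p[c] for p, w in zip(probas, weights)) / total
        for c in range(n_classes)
    ]
    # max() keeps the first maximum, so ties go to the lowest class index.
    return max(range(n_classes), key=avg.__getitem__), avg


def average_regression(preds, weights=None):
    """Return the (optionally weighted) mean of the models' numeric outputs."""
    weights = weights or [1.0] * len(preds)
    return sum(w * y for y, w in zip(preds, weights)) / sum(weights)


if __name__ == "__main__":
    print(hard_vote([0, 1, 1]))                     # 1: two of three models vote 1
    print(hard_vote([0, 1, 1], weights=[3, 1, 1]))  # 0: the heavy model outvotes the rest
    label, _ = soft_vote([[0.9, 0.1], [0.4, 0.6], [0.4, 0.6]])
    print(label)                                    # 0: one confident model tips the average
    print(average_regression([2.0, 3.0, 4.0]))      # 3.0
```

Note that the same three models yield class 1 under hard voting but class 0 under soft voting, because soft voting lets the first model's high confidence count for more than a single vote.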

Theoretical Advantages of Ensemble Voting

Ensemble voting provides several advantages over individual models:

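- Improved Accuracy: By combining the predictions of multiple models, ensemble voting often achieves higher accuracy than its individual members, although this is not guaranteed. The gain comes mainly from reduced variance: the errors of individual models partly cancel when their predictions are combined. Combining different model types can also offset some model-specific errors.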

- Robustness: Voting makes the model more robust to errors or noise in individual models. It mitigates the impact of inaccurate predictions from any single model by considering multiple viewpoints.

- Model Diversity and Complementary Strengths: Ensemble voting allows for the combination of various types of models, each with unique strengths and weaknesses. This diversity enables the ensemble to capture complementary patterns and features in the data, enhancing overall performance.

- Interpretability: Voting exposes the level of agreement among the models. Analyzing where the models agree or disagree, together with any assigned weights, helps in understanding each model's contribution to the ensemble's decision.
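
The accuracy and robustness claims above can be made concrete with a small calculation. The sketch below assumes an idealized setting that real ensembles only approximate: a binary task, an odd number of classifiers, each correct with the same probability `p`, and fully independent errors. The function name `majority_accuracy` is hypothetical.

```python
from math import comb


def majority_accuracy(n_models, p):
    """Probability that a strict majority of n_models classifiers is correct.

    Assumes a binary task, independent errors, and the same accuracy p for
    every classifier. n_models must be odd so that no ties can occur.
    """
    if n_models % 2 == 0:
        raise ValueError("n_models must be odd to avoid ties")
    k_min = n_models // 2 + 1  # smallest number of correct votes that wins
    return sum(
        comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
        for k in range(k_min, n_models + 1)
    )


if __name__ == "__main__":
    print(round(majority_accuracy(3, 0.7), 3))  # 0.784
    print(round(majority_accuracy(5, 0.7), 3))  # 0.837
    print(round(majority_accuracy(3, 0.4), 3))  # 0.352
```

Under these assumptions, voting helps when each model is better than chance (0.7 rises to 0.784 with three models) and hurts when each model is worse than chance (0.4 falls to 0.352), which is why accurate base learners matter as much as diverse ones.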

Applications of Ensemble Voting in Machine Learning

Ensemble voting is widely used in practice, particularly in fields like finance, healthcare, and computer vision. Applications include:

- Voting Classifier: Commonly used in classification tasks, such as fraud detection and medical diagnosis, where combining multiple classifiers improves prediction accuracy.

- Voting Regressor: Useful in regression tasks, such as predictive analytics in finance or weather forecasting, where averaging predictions from multiple regressors reduces prediction error.

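- Random Forests and Bagging: Random forests and other bagging methods aggregate the outputs of many decision trees, using majority voting for classification and averaging for regression. Some implementations, such as scikit-learn's random forest classifier, average the trees' predicted class probabilities instead of counting hard votes.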
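
As one illustration of the voting classifier and voting regressor described above, the sketch below uses scikit-learn's `VotingClassifier` and `VotingRegressor`. The choice of library, the synthetic data, the three base models, and the weights `[2, 1, 1]` are all assumptions made for the example and are not prescribed by the text.

```python
from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import (
    RandomForestClassifier,
    RandomForestRegressor,
    VotingClassifier,
    VotingRegressor,
)
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Synthetic binary classification data (illustrative only).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Three different model families, to encourage diverse errors.
estimators = [
    ("lr", LogisticRegression(max_iter=1000)),
    ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
    ("nb", GaussianNB()),
]

# Hard voting: each model casts one vote for a class label.
hard = VotingClassifier(estimators=estimators, voting="hard")
hard.fit(X_train, y_train)

# Weighted soft voting: predicted probabilities are averaged with weights.
soft = VotingClassifier(estimators=estimators, voting="soft", weights=[2, 1, 1])
soft.fit(X_train, y_train)

print("hard voting accuracy:", hard.score(X_test, y_test))
print("soft voting accuracy:", soft.score(X_test, y_test))

# Voting regressor: the prediction is the average of the base regressors.
Xr, yr = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)
reg = VotingRegressor(
    estimators=[
        ("lin", LinearRegression()),
        ("rf", RandomForestRegressor(n_estimators=50, random_state=0)),
    ]
)
reg.fit(Xr, yr)
print("regressor R^2 on training data:", reg.score(Xr, yr))
```

Soft voting requires every base classifier to expose `predict_proba`, which is why all three models here are probabilistic. Whether hard or soft voting scores higher depends on the data and on how well calibrated the base models are.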

Assumptions and Limitations of Ensemble Voting

While ensemble voting provides substantial benefits, there are important considerations:

- Model Independence and Diversity: Ensemble voting works best when the base models make largely independent errors, which in practice means they should differ in algorithm, hyperparameters, training data, or feature representations. Greater diversity among reasonably accurate models enhances accuracy and robustness.

- Computational Complexity: Ensemble voting can be computationally expensive due to the need to train and maintain multiple models, which may require additional resources for storage and processing.

- Correlated Models: If the base models are highly correlated (i.e., make similar errors), the ensemble’s effectiveness diminishes, as the benefits of diversity are reduced.
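
The effect of correlation can be quantified for the averaging case under a simplified model. Assume, purely for illustration, $n$ base predictors $f_1, \dots, f_n$ whose outputs each have variance $\sigma^2$ and whose pairwise correlation is a common value $\rho$ (these symbols are introduced here and do not appear elsewhere in the text). The variance of the averaged prediction is then:

$$
\operatorname{Var}\!\left(\frac{1}{n}\sum_{i=1}^{n} f_i\right)
= \frac{1}{n^2}\left(n\,\sigma^2 + n(n-1)\,\rho\,\sigma^2\right)
= \rho\,\sigma^2 + \frac{1-\rho}{n}\,\sigma^2
$$

Only the second term shrinks as more models are added. With $\rho = 0$ the variance falls as $\sigma^2 / n$, while with $\rho = 1$ it stays at $\sigma^2$ no matter how many models vote, which is the sense in which highly correlated models add little.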

Conclusion

Ensemble voting is a powerful technique in machine learning that combines multiple models to improve prediction accuracy, robustness, and interpretability. Different types of voting (e.g., majority voting, weighted voting, and soft voting) cater to different needs, and the choice depends on the problem’s requirements. With applications across various domains, ensemble voting remains a versatile tool for enhancing machine learning models.