28. Boosting

Boosting

History of Boosting

Boosting is a powerful ensemble technique in machine learning aimed at reducing both bias and variance by sequentially combining multiple weak learners to create a strong learner. The concept emerged from the question posed by Kearns and Valiant in the late 1980s: "Can a set of weak learners create a single strong learner?" Robert Schapire answered affirmatively in 1990, proving that weak learners could indeed be converted into a strong learner, laying the foundation for boosting.

Schapire and Freund later developed the Adaptive Resampling and Combining (Arcing) technique, which became synonymous with boosting. They introduced AdaBoost (Adaptive Boosting), one of the earliest and most impactful boosting algorithms, which iteratively focused on misclassified examples to improve accuracy. This innovation led to further developments, including Gradient Boosting and XGBoost, which are widely used today. Boosting has become essential in machine learning, offering solutions for complex tasks with high predictive accuracy.

Overview Boosting is an ensemble learning approach primarily used in supervised learning. It creates a sequence of models, each of which attempts to correct the errors made by the previous models. Boosting is particularly useful in scenarios where reducing bias is essential, such as classification tasks.

Unlike bagging, which averages predictions, boosting adds the outputs of the weak learners sequentially, focusing on the most challenging cases at each step.

Sequential Learning

Boosting follows a sequential learning approach, where each model is trained to correct the errors of its predecessor. In each iteration, the algorithm assigns higher weights to misclassified examples, making them more likely to be chosen for the next model’s training. This sequential dependency helps boosting focus on challenging cases, gradually refining the overall prediction accuracy.

Weighted Training Instances in Machine Learning

In machine learning, weighted training instances refer to the practice of assigning a specific weight to each data point in the training set, indicating its importance during the learning process. This technique is especially prevalent in ensemble methods like boosting, where the model iteratively focuses on challenging examples to enhance overall performance.

Purpose of Weighted Training Instances

The primary objectives of assigning weights to training instances include:

-

Emphasizing Hard-to-Classify Instances: Increasing the focus on examples that previous models misclassified, encouraging the algorithm to learn from its mistakes.

-

Balancing Class Distributions: In imbalanced datasets, assigning higher weights to minority class instances to prevent the model from being biased toward the majority class.

-

Incorporating Prior Knowledge: Reflecting the varying importance of different data points based on domain-specific insights.

Implementation in Boosting Algorithms

Boosting algorithms, such as AdaBoost, utilize weighted training instances to iteratively improve model accuracy:

-

Initialization: Assign equal weights to all training instances.

-

Training Weak Learner: Train a base model (weak learner) on the weighted dataset.

-

Error Calculation: Evaluate the model’s performance, identifying misclassified instances.

-

Weight Update: Increase the weights of misclassified instances and decrease the weights of correctly classified ones.

-

Iteration: Repeat the process, with each subsequent model focusing more on previously misclassified examples.

This iterative weighting mechanism ensures that the ensemble model progressively concentrates on challenging cases, leading to improved accuracy.

Applications and Benefits

Weighted training instances are beneficial in various scenarios:

-

Handling Imbalanced Data: By assigning higher weights to minority class instances, models can achieve better performance on imbalanced datasets.

-

Robustness to Noise: Reducing the influence of noisy or outlier data points by assigning them lower weights.

-

Improved Generalization: Focusing on hard-to-classify instances helps the model generalize better to unseen data.

Challenges of Weighted Training Instances

While weighted training instances offer significant advantages, they also present challenges:

-

Computational Complexity: Updating weights iteratively can be computationally intensive, especially with large datasets.

-

Overfitting Risk: Excessive focus on misclassified instances may lead to overfitting, where the model performs well on training data but poorly on new data.

How Boosting Works

The boosting process can be outlined as follows:

-

Initialize Weights: Assign equal weights to all training instances.

-

Train Weak Learner: Develop a weak learner using the weighted dataset.

-

Evaluate Performance: Assess the weak learner’s accuracy on the training data.

-

Update Weights: Increase weights for misclassified instances and decrease weights for correctly classified ones.

-

Aggregate Learners: Add the weak learner to the ensemble with a weight proportional to its accuracy.

-

Iterate: Repeat steps 2–5 until a specified number of weak learners are trained or the error reaches a minimum threshold.

This iterative approach ensures that each subsequent learner focuses more on the instances that previous learners found challenging, thereby enhancing the model’s overall accuracy.

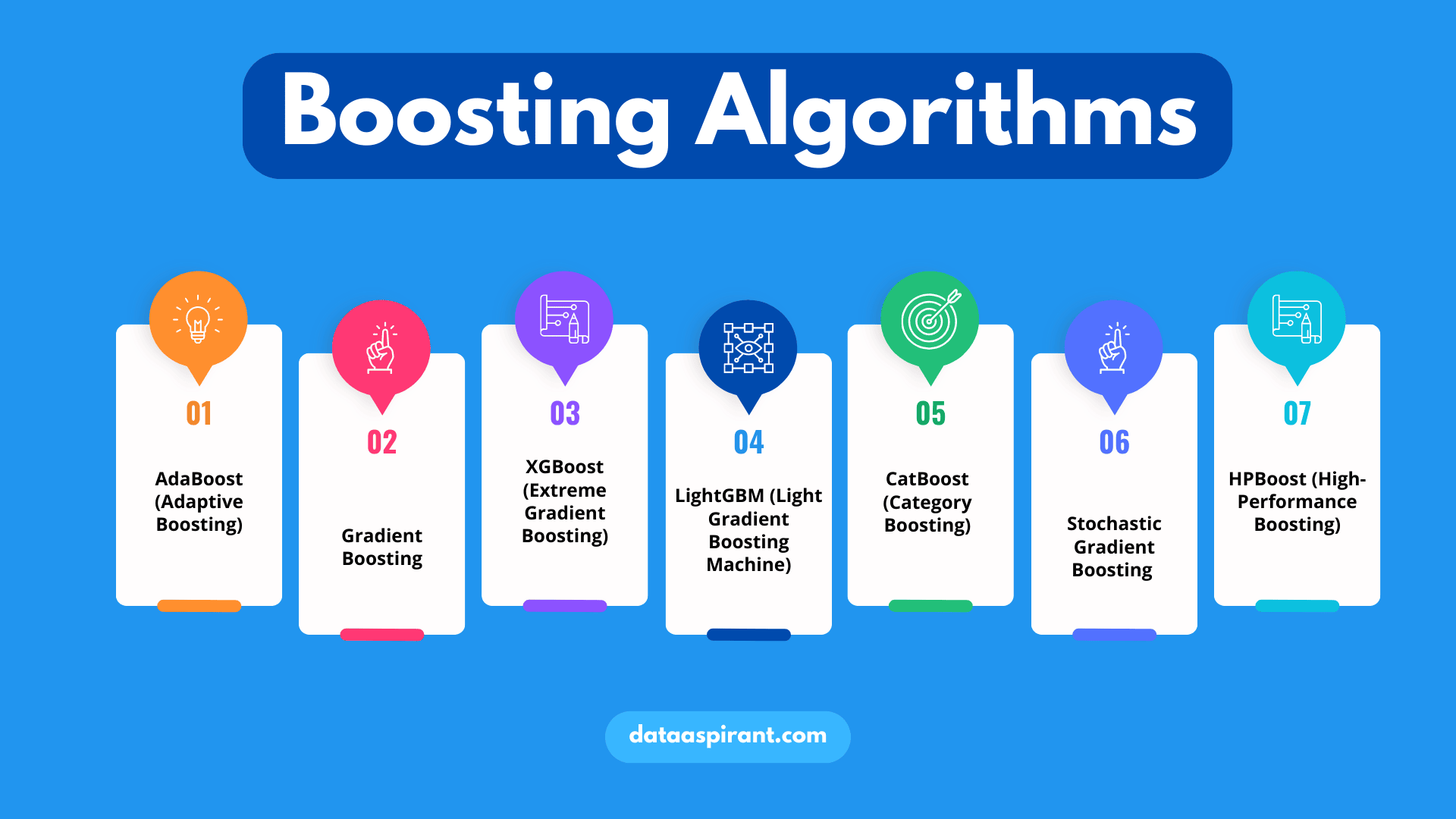

Boosting Algorithms

Several algorithms implement the boosting concept, each with unique methodologies:

Adaptive Boosting (AdaBoost)

Developed by Freund and Schapire, AdaBoost combines weak classifiers sequentially, adjusting the weights of misclassified instances in each iteration. It is particularly effective for binary classification tasks and has been extended to handle multiclass and regression tasks.

Mathematical Formulation of AdaBoost

AdaBoost adjusts the weights of training instances to focus on difficult cases:

-

Initialization: Assign equal weights to all instances:

\[w_i^{(1)} = \frac{1}{N} \quad \text{for } i = 1, 2, \ldots, N\]where $N$ is the total number of training instances.

-

Training: Train the weak learner $h_t$ using the weighted dataset.

-

Error Calculation: Compute the weighted error rate $\epsilon_t$:

\[\epsilon_t = \frac{\sum_{i=1}^N w_i^{(t)} \cdot I(y_i \neq h_t(x_i))}{\sum_{i=1}^N w_i^{(t)}}\]where $I(\cdot)$ is the indicator function, $y_i$ is the true label, and $h_t(x_i)$ is the prediction.

-

Model Weight: Determine the weight $\alpha_t$ of the weak learner:

\[\alpha_t = \frac{1}{2} \ln\left(\frac{1 - \epsilon_t}{\epsilon_t}\right)\] -

Weight Update: Update the weights for the next iteration:

\[w_i^{(t+1)} = w_i^{(t)} \cdot \exp\left(\alpha_t \cdot I(y_i \neq h_t(x_i))\right)\]Normalize the weights so that they sum to 1:

\[w_i^{(t+1)} = \frac{w_i^{(t+1)}}{\sum_{j=1}^N w_j^{(t+1)}}\]

This process repeats for a predefined number of iterations or until a desired performance level is achieved.

Gradient Boosting

Gradient Boosting extends the boosting concept by adding models to minimize a loss function, typically through gradient descent. This approach allows for greater flexibility in error correction and is widely used for regression and classification tasks. Modern variations, such as XGBoost and LightGBM, offer optimizations that make Gradient Boosting scalable to large datasets.

Mathematical Formulation of Gradient Boosting

Gradient Boosting builds an ensemble model by sequentially adding new models that reduce a specified loss function. The steps for Gradient Boosting are as follows:

-

Initialization: Initialize the model with a constant prediction:

\[F_0(x) = \arg \min_\gamma \sum_{i=1}^N L(y_i, \gamma)\]where $L(y, F(x))$ is the loss function.

-

Compute Pseudo-Residuals: For each instance, compute the pseudo-residuals, which represent the negative gradient of the loss function with respect to the model’s predictions:

\[r_i^{(t)} = -\left. \frac{\partial L(y_i, F(x_i))}{\partial F(x_i)} \right|_{F(x) = F_{t-1}(x)}\] -

Fit a Weak Learner: Train a weak learner $h_t(x)$ to predict the pseudo-residuals $r_i^{(t)}$.

-

Update the Model: Update the model by adding the predictions of the weak learner, scaled by a learning rate $\eta$:

\[F_t(x) = F_{t-1}(x) + \eta h_t(x)\]where $\eta$ is the learning rate, controlling the contribution of each weak learner.

-

Iterate: Repeat steps 2–4 for a predefined number of iterations or until the loss function converges.

Gradient Boosting minimizes the loss function iteratively by fitting weak learners to the gradients, making it suitable for complex, nonlinear relationships.

Applications of Boosting

Boosting algorithms have been successfully applied in various domains:

-

Object Categorization in Computer Vision: Boosting methods are used to combine weak classifiers based on image features, enhancing object recognition accuracy.

-

Handling Imbalanced Data: By assigning higher weights to minority class instances, boosting helps in addressing class imbalance issues.

Challenges and Considerations

While boosting offers significant advantages, it also presents challenges:

-

Computational Complexity: The iterative training and weight updating can be computationally intensive, especially with large datasets.

-

Overfitting Risk: Excessive focus on misclassified instances may lead to overfitting, where the model performs well on training data but poorly on new data.

Understanding these aspects is crucial for effectively implementing boosting algorithms in machine learning tasks.

Application of Boosting in Detecting AI-Generated Text

Boosting algorithms, particularly AdaBoost and Gradient Boosting, can be highly effective for detecting AI-generated text. Since AI-generated text can often exhibit subtle, complex patterns, boosting methods allow the model to focus iteratively on the challenging cases that may be difficult to identify using simpler models. Key benefits of using boosting in this context include:

-

Enhanced Pattern Recognition: By iteratively focusing on misclassified examples, boosting helps the model capture nuanced patterns typical of AI-generated text.

-

Reduced Bias: Boosting helps reduce bias, which is beneficial when the AI-generated and human-generated text may be quite similar. The model can iteratively adjust to capture subtle discrepancies.

-

Customizable Loss Function: With Gradient Boosting, we can customize the loss function to focus specifically on characteristics that differentiate AI-generated text, such as unusual phrase patterns or lexical diversity.

-

High Predictive Accuracy: Boosting algorithms, especially XGBoost and CatBoost, have a strong track record for classification tasks in NLP, making them suitable for AI-generated text detection.

Example

Suppose we have a dataset containing both AI-generated and human-generated text. By training a boosting model (e.g., AdaBoost) with decision tree stumps as weak learners, the model could learn to focus on common misclassification cases, such as syntactically correct but semantically odd phrases, which are more common in AI-generated text.

Summary Boosting offers a robust approach for distinguishing between AI-generated and human-generated text by focusing on hard-to-detect patterns, making it a valuable tool for maintaining content authenticity and improving AI accountability in natural language processing tasks.